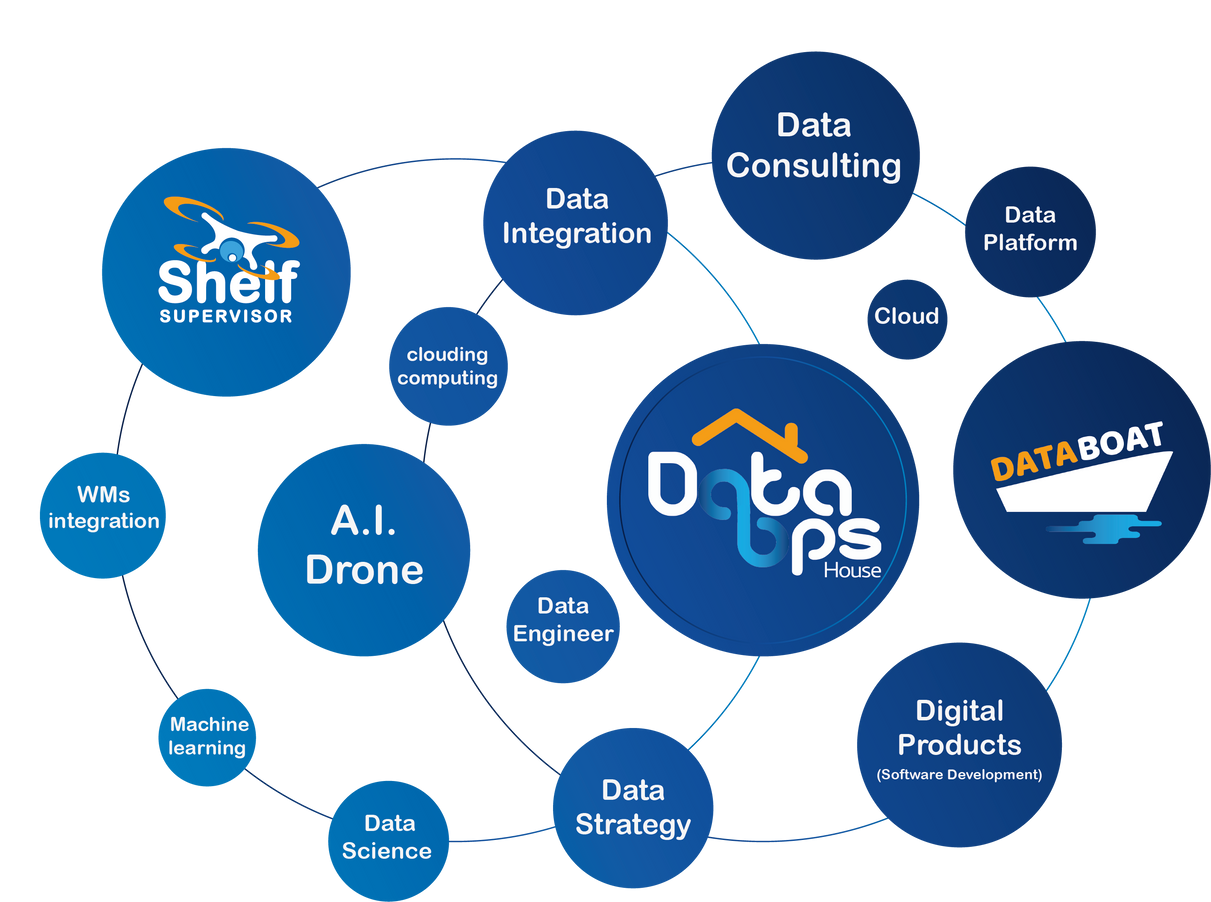

Cloud & Data Experts

Your Data-Driven Journey: From Strategy to Value

Our Clients

Partnership

Who we are

DataOps House is a boutique data consultancy that empowers companies to make better decisions. With our data products, we democratize data in an efficient, holistic, and sustainable manner.

Let's really execute your data strategy!

Blog
